Article by: Asst.Prof. Suwan Juntiwasarakij, Ph.D., MEGA Tech Senior Editor

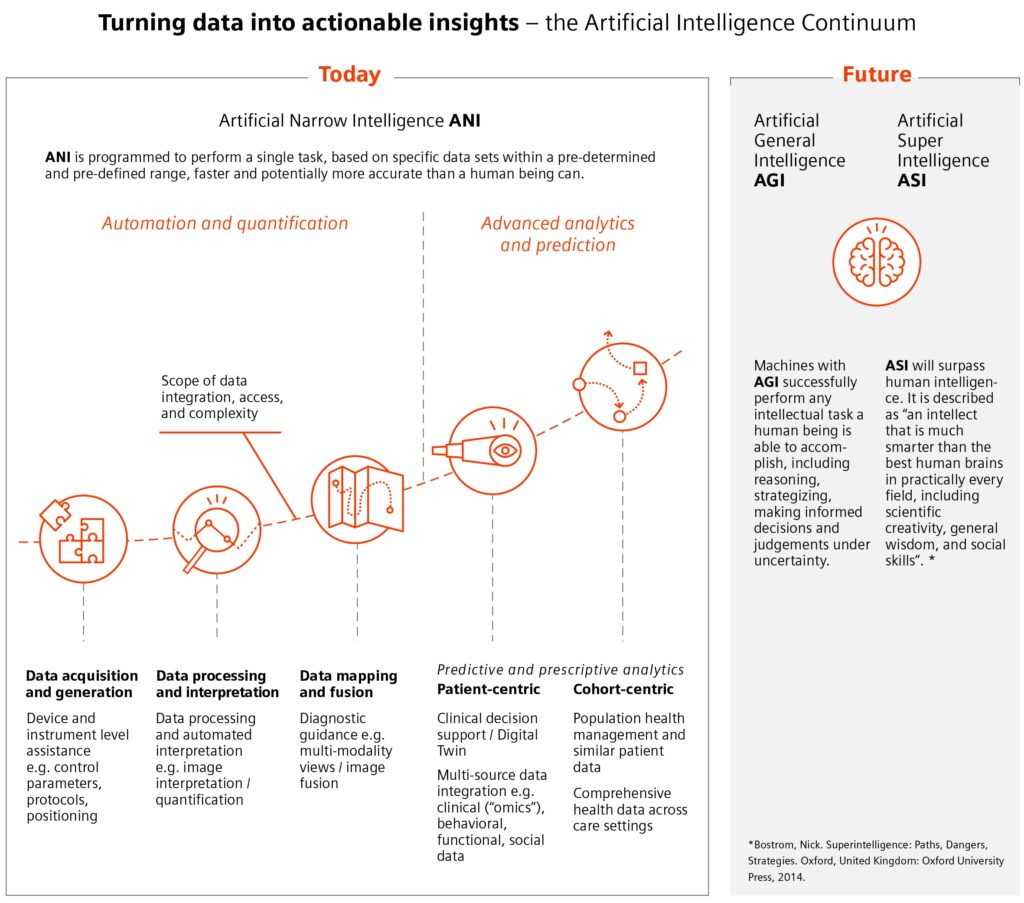

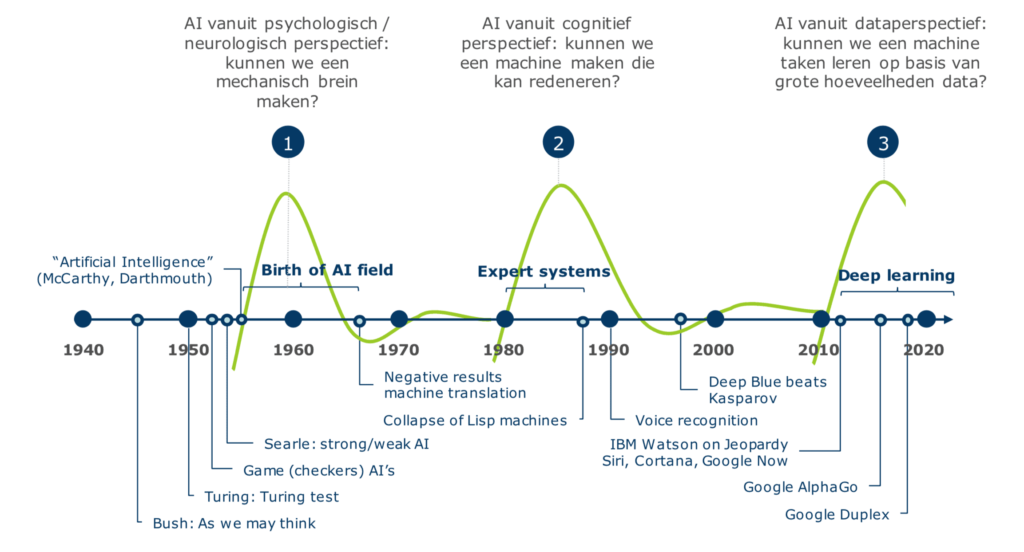

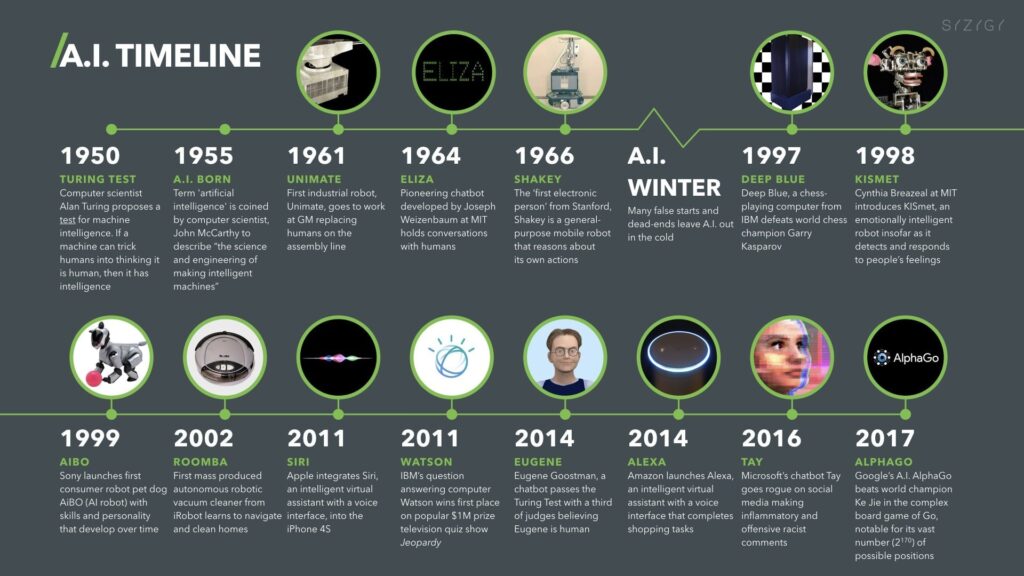

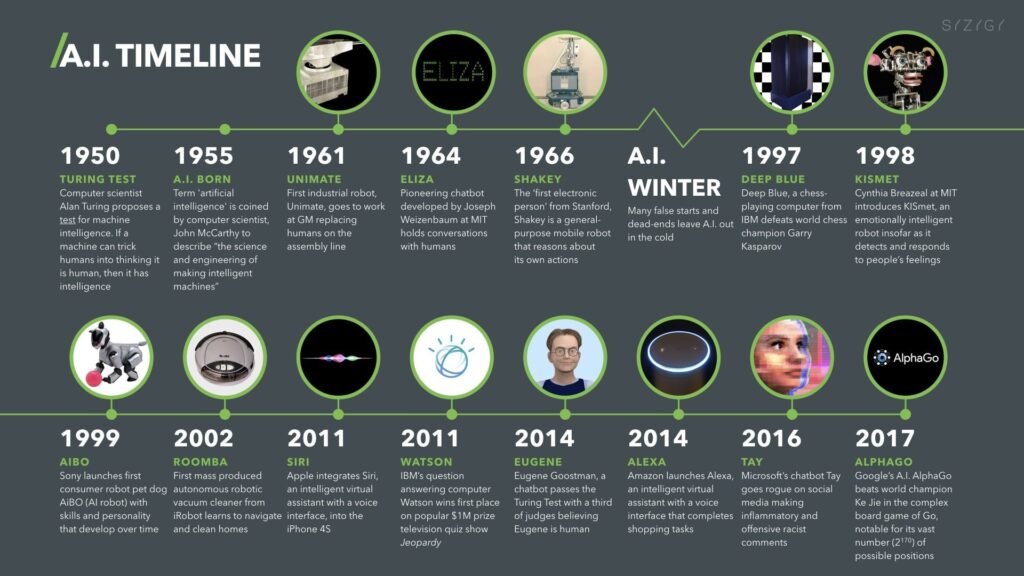

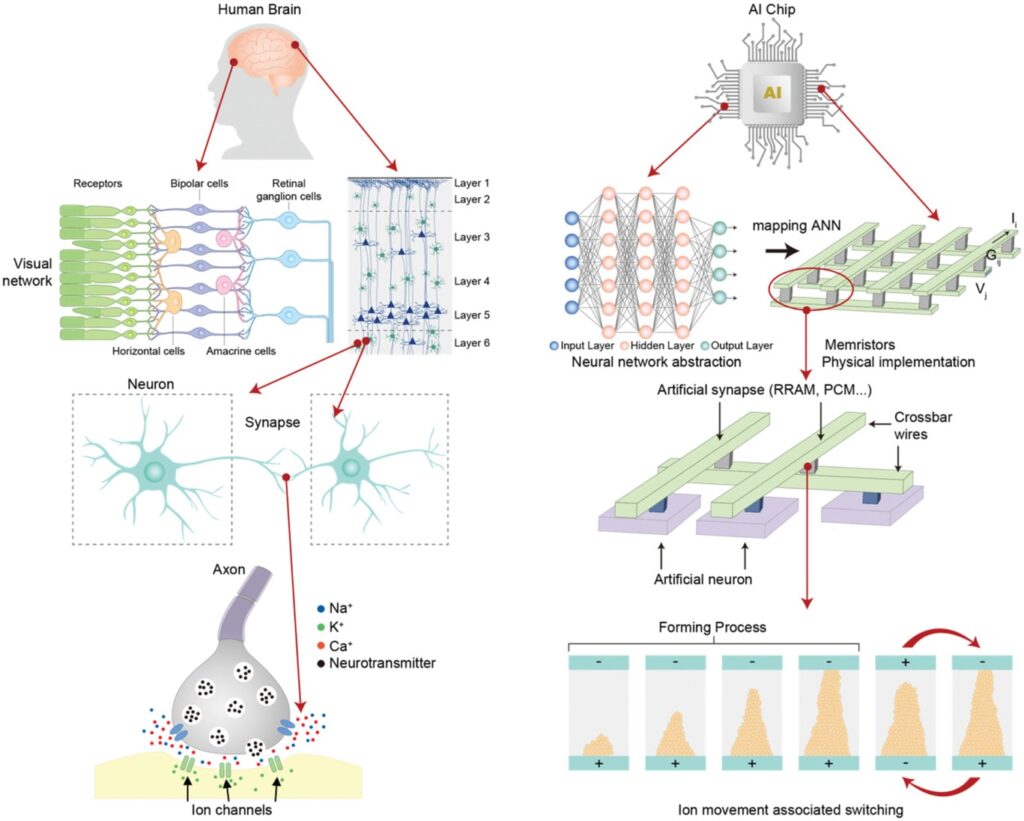

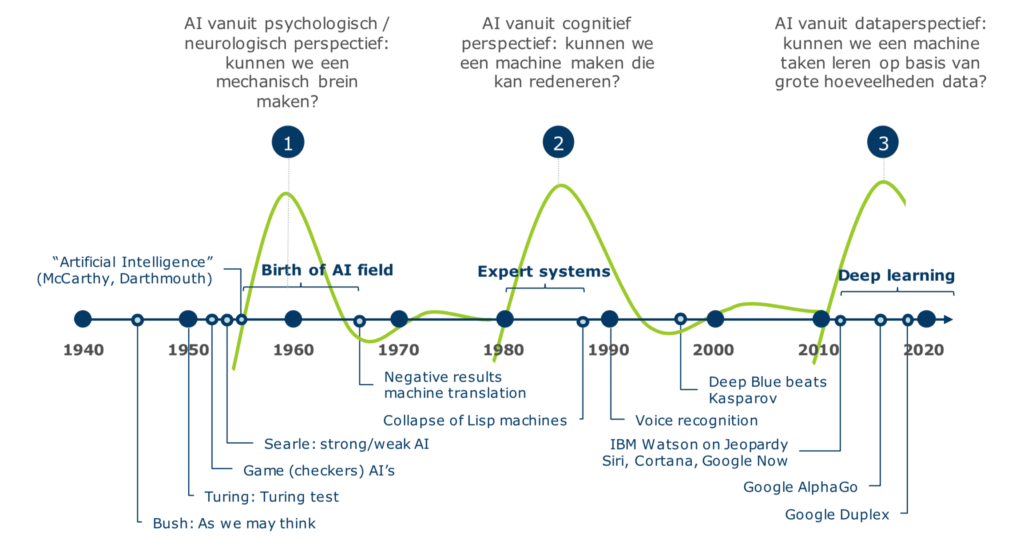

Artificial intelligence (AI) has become a household name and has been part of pop culture for decades. As a discipline, AI officially began in 1956 at Dartmouth College, where the most eminent experts gathered to brainstorm on the simulation of intelligence. This happened only a few years after Asimov set out his three laws of robotics and, more relevantly, after Turing’s famous 1950 paper, in which he proposed for the first time the idea of a thinking machine and the now popular Turing test for assessing whether such a machine shows any intelligence. Before starting on a brief history of AI, let us introduce three different types of AI: narrow AI, general AI, and artificial superintelligence.

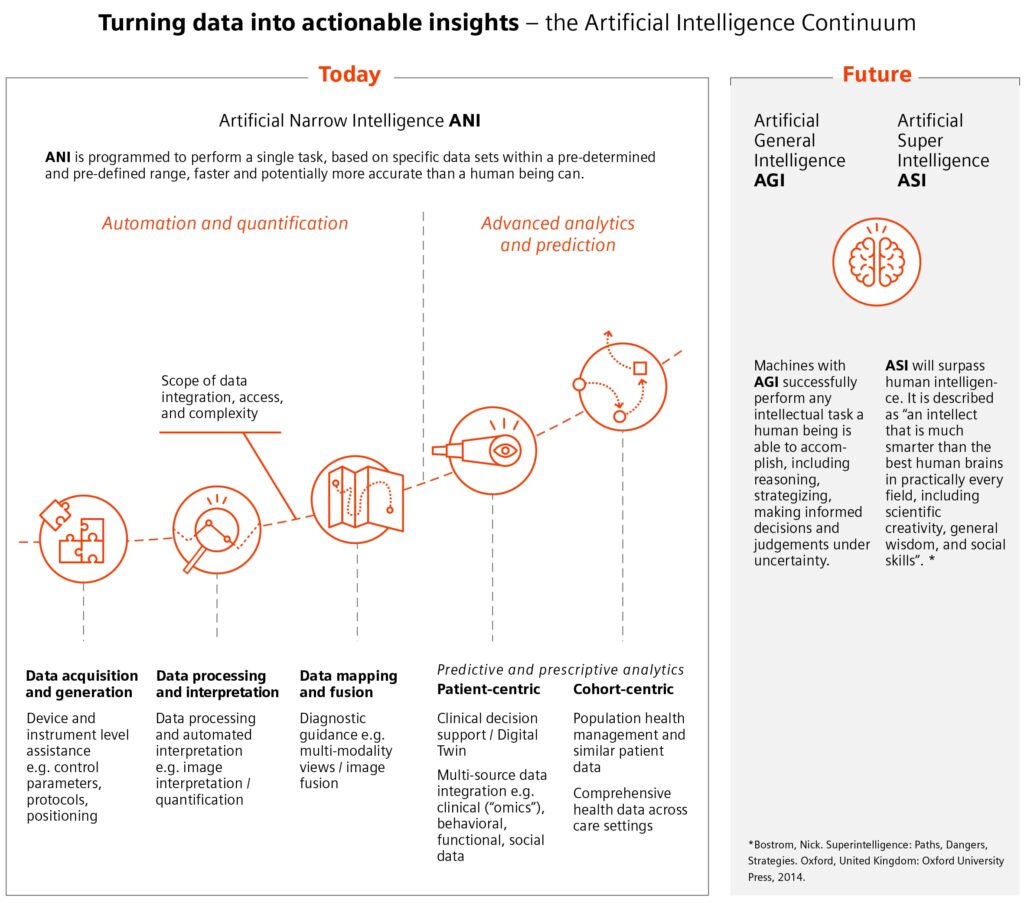

Source: SIEMENS Healthineers, adapted from “Superintelligence: Paths, Dangers, Strategies”

ARTIFICIAL GENERAL INTELLIGENCE (AGI)

Artificial general intelligence is the capability of a machine to perform the same intellectual tasks as a human, to the same standard. This line of AI is also known as “Strong AI” or “Human-Level AI”. In contrast to narrow AI, AGI encompasses the general capabilities of humans rather than specific, narrowly defined tasks. There has been some debate about what intelligence actually is and what AGI should represent. A wide consensus has formed around six core capabilities that human-level AI would need:

1. The ability to reason, solve problems, use strategy and make decisions under uncertainty.

2. The ability to represent knowledge.

3. The ability to plan.

4. The capability to learn.

5. The ability to communicate in a natural language.

6. The ability to integrate all of the above toward a common goal.

ARTIFICIAL SUPERINTELLIGENCE (ASI)

ASI refers to a superintelligent computer whose intelligence would far surpass that of the brightest and most gifted human minds. Researchers disagree on how this can be achieved (if it can be at all). Some argue that AI development will result in reasoning systems that lack human limitations; others believe that humans will evolve or directly modify themselves to incorporate ASI and achieve radically improved cognitive and physical abilities.

In reality, ASI may not be as far-fetched as you might think. An AI system with the capability to reprogram and improve itself indefinitely could, in theory, do so in a rapidly accelerating cycle, resulting in an intelligence explosion that would not be limited by our own genetic restrictions.

ARTIFICIAL NARROW INTELLIGENCE (ANI)

Narrow AI is a type of artificial intelligence technology that enables computers to outperform humans in some very narrowly defined task. Unlike general intelligence, narrow intelligence focuses on a single subset of abilities and looks to make strides within that subset.

The most culturally relevant example of narrow AI is IBM’s Watson supercomputer, which won the American TV quiz show Jeopardy!. In essence, Watson is an expert “question answering” machine that uses AI technology to mimic the cognitive capabilities of humans. Narrow AI is still the most common form of AI technology used in industry, although typical applications are less glamorous than Watson. Any software that uses machine learning or data mining to make decisions can generally be considered narrow AI. Narrow AI is also known as “Weak AI”, whereas general intelligence is known as “Strong AI.”

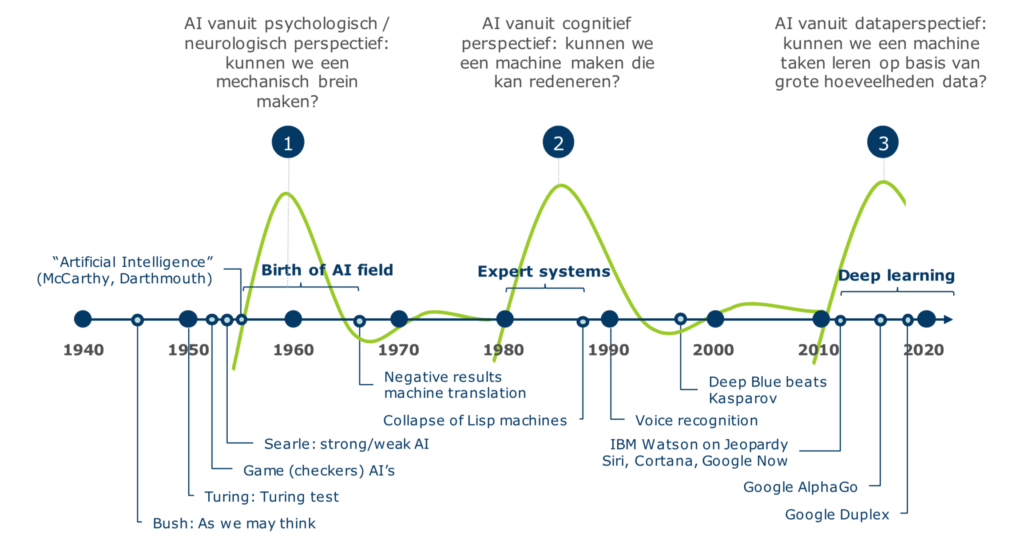

[1] THE GENESIS

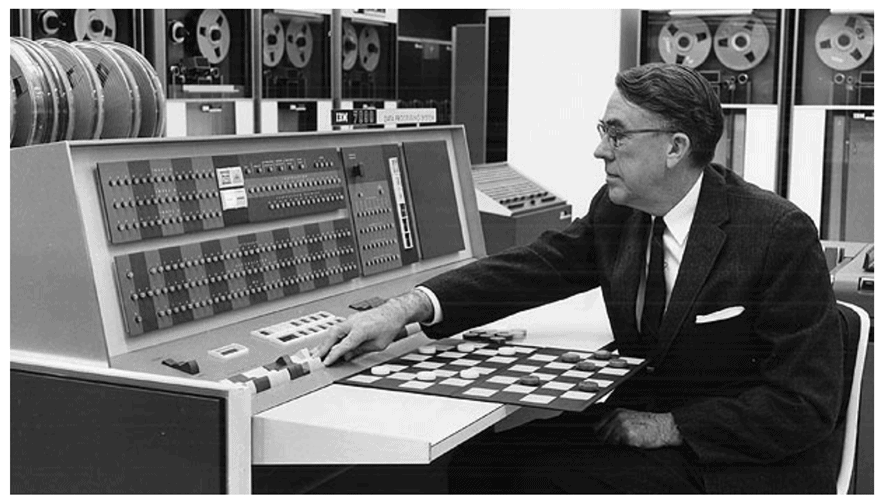

In the 1950s, AI seemed to be within easy reach, but that turned out not to be the case. By the end of the 1960s, researchers realized that AI was indeed a tough field to manage, and the initial spark that had brought in the funding started to dissipate. This phenomenon, which has characterized AI throughout its history, is commonly known as the “AI effect,” and it has two parts: the constant promise of real AI arriving in the following decade, and the discounting of AI behavior once it has mastered a certain problem, which continuously redefines what intelligence means.

In the United States, DARPA funded AI research mainly in pursuit of a perfect machine translator, but two consecutive events wrecked that proposal and began what would later be called the first AI winter. Some key events during this time are:

1950 | TURING TEST | The Turing test is a method for determining the intelligence of a machine.

1955 | THE TERM AI | The term Artificial Intelligence is used for the first time.

1966 | ELIZA | ELIZA is one of the first chatbots; it simulates a conversation with a psychotherapist.

Source: IBM

[2] THE REVIVAL

From the 1970s to the 1980s, a new wave of funding in the UK and Japan was motivated by the introduction of “expert systems,” which were basically examples of narrow AI: an application for a specific domain or task that gets better by ingesting further data and “learns” how to reduce its output error. These programs were, in fact, only able to simulate the skills of human experts in specific domains, but this was enough to stimulate a new funding trend. The most active player during those years was the Japanese government, and its rush to create the fifth-generation computer indirectly forced the US and UK to reinstate funding for AI research. This golden age did not last long, and when the funding goals were not met, a new crisis began. A key event during this time is:

1980s | EXPERT SYSTEMS | Edward Feigenbaum creates expert systems that emulate the decisions of human experts.
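To make the idea of an expert system more concrete, here is a minimal sketch of forward-chaining inference over if-then rules, a style of reasoning commonly associated with such systems. The rules, the fact names, and the function `forward_chain` are hypothetical and invented purely for illustration; they are not taken from any historical system.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # Fire a rule only if all its conditions hold and it adds something new.
            if conclusion not in known and all(c in known for c in conditions):
                known.add(conclusion)
                changed = True
    return known


# Hypothetical toy rule base: (conditions, conclusion) pairs.
RULES = [
    (("fever", "cough"), "possible_flu"),
    (("possible_flu", "short_of_breath"), "refer_to_doctor"),
]

derived = forward_chain({"fever", "cough", "short_of_breath"}, RULES)
print(sorted(derived))
```

The knowledge lives entirely in the hand-written rules, which illustrates why such programs could imitate an expert only within the narrow domain their rules covered.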

[3] THE RETURN

Toward the end of 1993, the MIT Cog project set out to build a humanoid robot. Together with the Dynamic Analysis and Replanning Tool (DART), which was funded by the US government, and the defeat of Kasparov at chess by IBM’s Deep Blue in 1997, this put AI back in the spotlight. In the last two decades, much has been done in academic research, but AI has only recently been recognized as a paradigm shift.

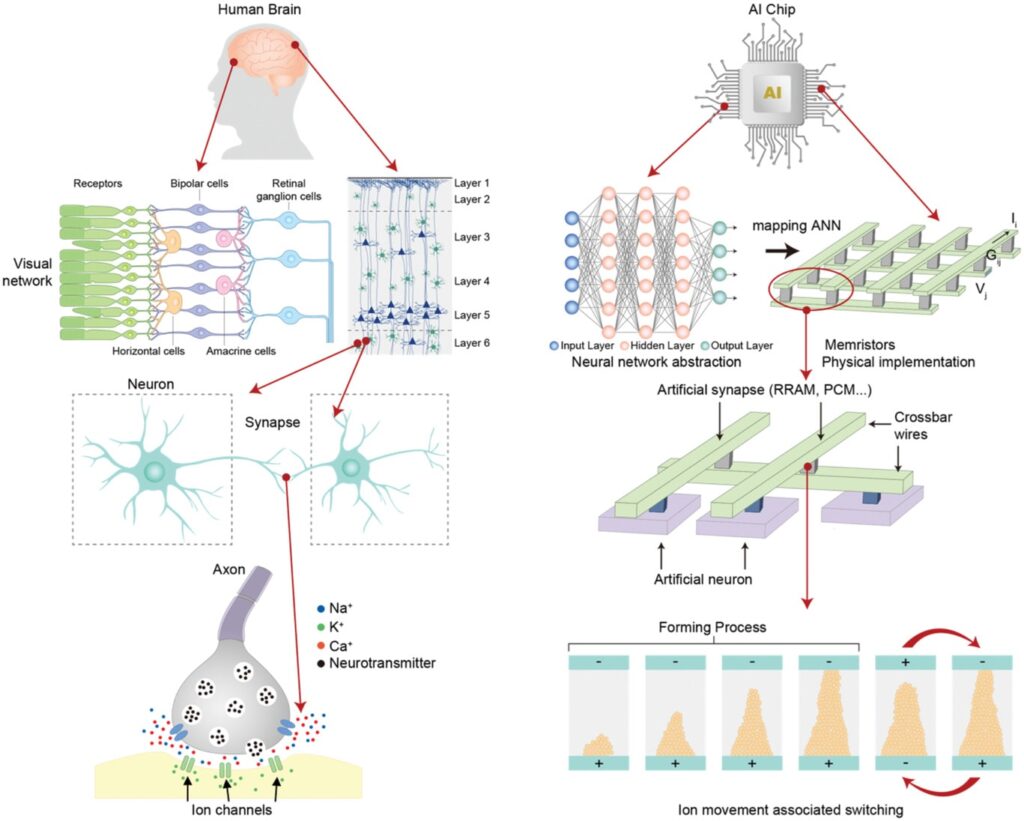

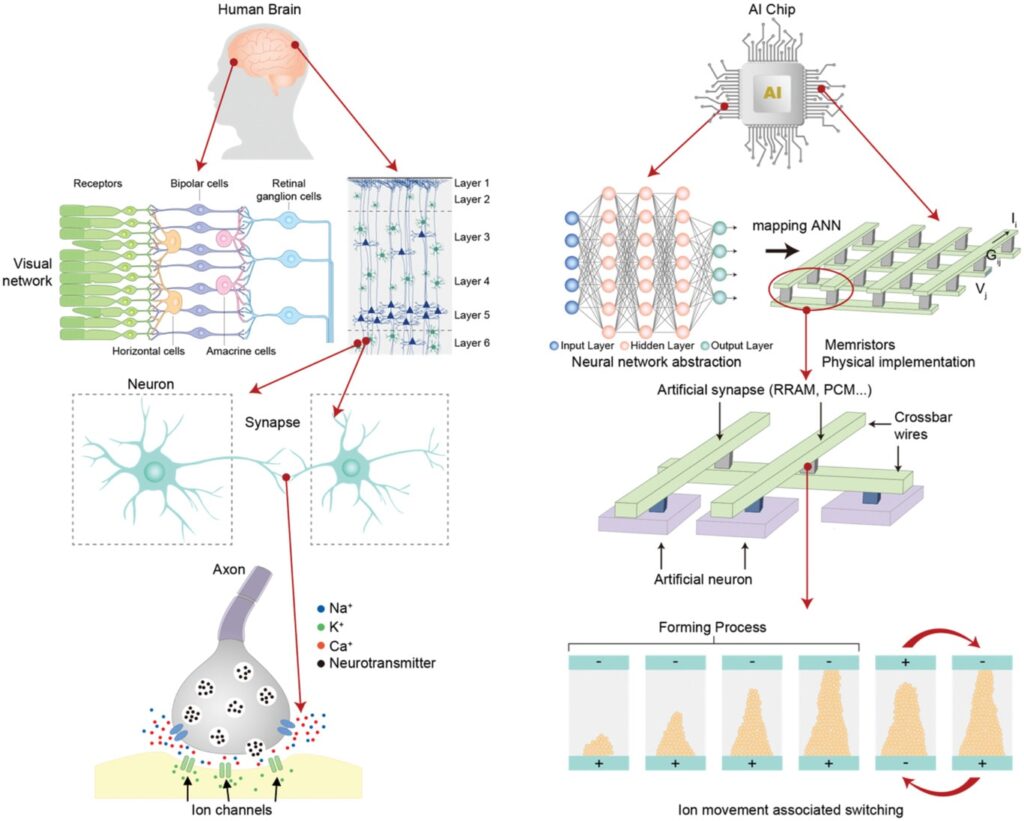

There are, of course, a number of reasons why we are investing so much in AI nowadays, but there is one specific event that we think is responsible for the trend of the last five years. Despite all the developments achieved, AI was not widely recognized until the end of 2012. On December 4, 2012, a group of researchers presented at the Neural Information Processing Systems (NIPS) conference detailed information about the convolutional neural networks that had earned them first place in the ImageNet classification competition a few weeks earlier. Their work improved classification accuracy from 72% to 85% and established neural networks as fundamental to artificial intelligence. Within a few years, advancements in the field brought classification accuracy in the ImageNet contest to 96%, slightly higher than human accuracy (about 95%).

Source: Digital Wellbeing
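One way to read the ImageNet figures quoted in this section is as error rates rather than accuracies. The short calculation below uses only the rounded percentages given in the text (72%, 85%, and 96%); the helper name `relative_error_reduction` is made up for this illustration.

```python
def relative_error_reduction(acc_before, acc_after):
    """Fraction of the remaining error removed when accuracy (in %) improves."""
    err_before = 100 - acc_before
    err_after = 100 - acc_after
    return (err_before - err_after) / err_before


# 2012 result: accuracy 72% -> 85%, i.e. error 28% -> 15%.
print(f"{relative_error_reduction(72, 85):.0%}")  # prints 46%

# Later progress: accuracy 85% -> 96%, i.e. error 15% -> 4%.
print(f"{relative_error_reduction(85, 96):.0%}")  # prints 73%
```

Seen this way, and taking the article's rounded numbers at face value, the 2012 result removed a little under half of the remaining error in a single step, which helps explain why it drew so much attention.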

AI’S NEXT CHAPTER

There are growing concerns that we may currently be living through the next peak phase and that the thrill is destined to end soon. However, like many others, the author believes that this new era is different for three main reasons. First, big data: we finally have the bulk of data needed to feed the algorithms. Second, technological progress: storage capacity, computational power, algorithmic understanding, better and greater bandwidth, and lower technology costs have allowed us to actually make the models digest the information they need. Lastly, the democratization and efficient allocation of resources introduced by the Uber and Airbnb business models, which is reflected in cloud services (e.g., Amazon Web Services) and parallel computing on GPUs.