Article by: Asst. Prof. Suwan Juntiwasarakij, Ph.D., Senior Editor & MEGA Tech

Artificial intelligence is now used in almost every aspect of life. Applications with embedded AI are common in medicine, geology, customer data analysis, autonomous vehicles, and even art, and the range of uses keeps growing. For manufacturers, AI will be a game changer at every level of the value chain: direct automation, predictive maintenance, reduced downtime, production 24 hours a day, improved safety, lower operating costs, greater efficiency, quality control, and faster decision-making. These are only some of the rewards awaiting organizations that embrace the change and adopt AI to the point of mastery.

Adopting AI on the factory floor is becoming popular among manufacturers. Research by Capgemini found that more than half of European manufacturers (51%) are implementing AI solutions, followed by Japan (30%) and the United States (28%) in second and third place. The same study shows that the most popular AI use cases in manufacturing are improving maintenance (29%) and improving quality (27%). This popularity stems from the fact that the data produced by manufacturing processes is well suited to AI and machine learning. Manufacturing processes are rich in analytical data, which lends itself to analysis by AI. It is difficult for a human to grasp the many variables that affect a production process, but a model built by machine learning can readily predict the complex effect of each individual variable. In other industries where language and emotion are involved, however, machine intelligence still performs below the human level.

A PwC study found that the economic benefits of AI come from (1) productivity gains as businesses automate processes and use various AI technologies to augment and support their human workforce, and (2) increased consumer demand resulting from a wider variety of products and services that can be personalized and are of higher quality. Beyond applying the technology to business-critical tasks, companies are placing growing importance on applying it to manufacturing processes, including production development, engineering, assembly, and quality testing. Manufacturing is one of the most important sectors of the world economy, accounting for 17% of global GDP in 2021 and generating output worth USD 16.5 trillion worldwide.

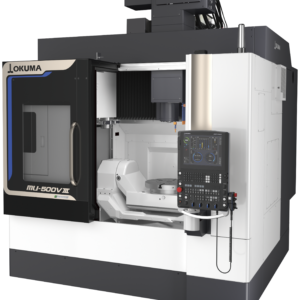

The global smart manufacturing market was valued at USD 97.6 billion in 2022 and is expected to reach USD 228.3 billion by 2027, growing at a CAGR of 18.5% between 2022 and 2027. Predictive maintenance is one of the most important use cases in manufacturing. PwC reports that predictive maintenance will be one of the most significant growing machine learning technologies in the manufacturing industry, with its market value increasing by 38% between 2020 and 2025. Unplanned maintenance can do serious damage to a business. Knowing in advance which equipment is going to fail helps manufacturers avoid downtime losses and wasted investment. A Deloitte report found that predictive maintenance increases productivity by 25%, reduces production losses by 70%, lowers maintenance costs by 25%, and improves equipment performance by 10 to 20%. Overall, maintenance spending falls by 5 to 10% and maintenance planning time by 20 to 50%.
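The growth figures quoted above can be cross-checked with the standard compound annual growth rate formula. The short sketch below is only an illustration of that arithmetic; the function names `cagr` and `project` are chosen here for the example and do not come from the cited reports.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


# Market sizes in USD billions, as quoted in the article (2022 and 2027).
implied_rate = cagr(97.6, 228.3, 5)
projected_2027 = project(97.6, 0.185, 5)

print(f"Implied CAGR 2022-2027: {implied_rate:.1%}")
print(f"2027 value at 18.5% CAGR: {projected_2027:.1f} billion USD")
```

The implied rate and the projected 2027 value agree with the quoted 18.5% and USD 228.3 billion to within rounding, so the three figures are consistent with one another.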

Once a business has decided to adopt predictive maintenance, the journey toward full predictive maintenance begins with the new insights picked up along the way. Those insights may not yet be precise enough to predict failures, but they still lead to better outcomes: less machine downtime and higher output. At stage 0, the main objective is to understand which kinds of data the process uses. At stage 1, the sensors must deliver data consistently, and the data from every sensor flows into a single platform. With a powerful tool known as "visualization", experts can identify which parameters signal an imminent failure. At stage 2, the emphasis is on human experts and the process-variable data, from which simplified rules can be distilled. These rules reduce the complexity yet can still be used to prevent losses from equipment failure.
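As a rough illustration of stage 2, expert knowledge can be written down as a handful of simple rules evaluated against each sensor reading. Everything in the sketch below is hypothetical: the sensor field names (`bearing_temp_c`, `vibration_mm_s`, `load_pct`) and the threshold values are placeholders for the example, not figures from the article or from any standard.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    name: str
    check: Callable[[dict], bool]  # returns True when the rule fires


# Hypothetical expert rules; thresholds are illustrative only.
RULES = [
    Rule("bearing temperature high",
         lambda r: r["bearing_temp_c"] > 85.0),
    Rule("high vibration under load",
         lambda r: r["vibration_mm_s"] > 7.0 and r["load_pct"] > 50.0),
]


def triggered_rules(reading: dict, rules: list = RULES) -> list:
    """Return the names of all rules that fire for one sensor reading."""
    return [rule.name for rule in rules if rule.check(reading)]


normal = {"bearing_temp_c": 62.0, "vibration_mm_s": 2.5, "load_pct": 70.0}
suspect = {"bearing_temp_c": 91.0, "vibration_mm_s": 8.2, "load_pct": 80.0}

print(triggered_rules(normal))
print(triggered_rules(suspect))
```

Keeping each rule as a named, separate check makes it easy for an expert to review, adjust, or retire individual rules as more is learned about the process.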

Anomaly detection, however, requires a sufficient volume of sensor data, specified as a minimum quantity: the frequency at which data is sent per unit of time. This data makes it possible to establish a baseline for the process. The operator is alerted when incoming data deviates from that baseline and must then decide whether a failure has actually occurred. A large body of failure data, accompanied by detailed records, is essential for correcting and preventing undesirable situations. When advanced analytics is added, failures can be detected promptly and reliably. For root-cause analysis, both successful and unsuccessful fixes must be recorded. This narrows down the possible causes of a loss so that an appropriate course of action can be proposed.
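The baseline-and-alert idea described above can be sketched in a few lines. This is a minimal example under stated assumptions, not a description of any particular product: the baseline is taken to be the mean and standard deviation of historical readings, an alert fires when a reading is more than `k` standard deviations away, and the values of `min_samples` and `k` as well as the sample data are hypothetical.

```python
import statistics


def build_baseline(samples: list, min_samples: int = 30) -> tuple:
    """Return (mean, standard deviation) of historical readings.

    Refuses to build a baseline when too little data is available.
    """
    if len(samples) < min_samples:
        raise ValueError("not enough sensor data to establish a baseline")
    return statistics.fmean(samples), statistics.stdev(samples)


def is_anomalous(value: float, baseline: tuple, k: float = 3.0) -> bool:
    """True when the reading is more than k standard deviations from the mean."""
    mean, std = baseline
    return abs(value - mean) > k * std


# Hypothetical history: 40 readings cycling between 50.0 and 50.4.
history = [50.0 + 0.1 * (i % 5) for i in range(40)]
baseline = build_baseline(history)

# New readings; those flagged would be sent to the operator for review.
new_readings = [50.2, 50.3, 53.0]
alerts = [v for v in new_readings if is_anomalous(v, baseline)]
print(alerts)
```

In this sketch the code only raises the alert. Deciding whether a real failure has occurred remains with the operator, as the text describes.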